维基百科语料中的词语相似度探索

之前写过《中英文维基百科语料上的Word2Vec实验》,近期有不少同学在这篇文章下留言提问,加上最近一些工作也与Word2Vec相关,于是又做了一些功课,包括重新过了一遍Word2Vec的相关资料,试了一下gensim的相关更新接口,google了一下"wikipedia word2vec" or "维基百科 word2vec" 相关的英中文资料,发现多数还是走得这篇文章的老路,既通过gensim提供的维基百科预处理脚本"gensim.corpora.WikiCorpus"提取维基语料,每篇文章一行文本存放,然后基于gensim的Word2Vec模块训练词向量模型。这里再提供另一个方法来处理维基百科的语料,训练词向量模型,计算词语相似度(Word Similarity)。关于Word2Vec, 如果英文不错,推荐从这篇文章入手读相关的资料: Getting started with Word2Vec 。

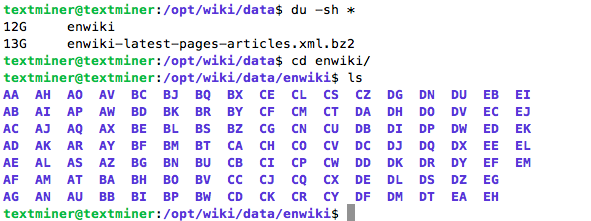

这次我们仅以英文维基百科语料为例,首先依然是下载维基百科的最新XML打包压缩数据,在这个英文最新更新的数据列表下:https://dumps.wikimedia.org/enwiki/latest/ ,找到 "enwiki-latest-pages-articles.xml.bz2" 下载,这份英文维基百科全量压缩数据的打包时间大概是2017年4月4号,大约13G,我通过家里的电脑wget下载大概花了3个小时,电信100M宽带,速度还不错。

接下来就是处理这份压缩的XML英文维基百科语料了,这次我们使用WikiExtractor:

WikiExtractor.py is a Python script that extracts and cleans text from a Wikipedia database dump.

The tool is written in Python and requires Python 2.7 or Python 3.3+ but no additional library.

WikiExtractor是一个Python 脚本,专门用于提取和清洗Wikipedia的dump数据,支持Python 2.7 或者 Python 3.3+,无额外依赖,安装和使用都非常方便:

安装:

cd wikiextractor/

sudo python setup.py install

使用:

......

INFO: 53665431 Pampapaul

INFO: 53665433 Charles Frederick Zimpel

INFO: Finished 11-process extraction of 5375019 articles in 8363.5s (642.7 art/s)

这个过程总计花了2个多小时,提取了大概537万多篇文章。关于我的机器配置,可参考:《深度学习主机攒机小记》

提取后的文件按一定顺序切分存储在多个子目录下:

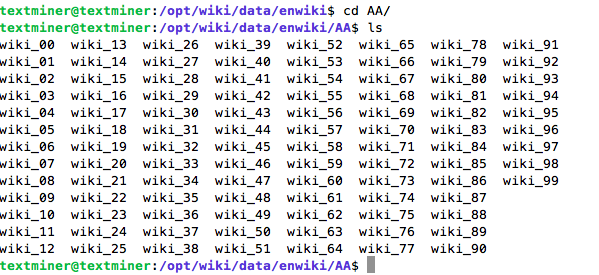

每个子目录下的又存放若干个以wiki_num命名的文件,每个大小在1M左右,这个大小可以通过参数 -b 控制:

我们看一下wiki_00里的具体内容:

Anarchism

Anarchism is a political philosophy that advocates self-governed societies based on voluntary institutions. These are often described as stateless societies, although several authors have defined them more specifically as institutions based on non-hierarchical free associations. Anarchism holds the state to be undesirable, unnecessary, and harmful.

...

Criticisms of anarchism include moral criticisms and pragmatic criticisms. Anarchism is often evaluated as unfeasible or utopian by its critics.

</doc>

<doc id="25" url="https://en.wikipedia.org/wiki?curid=25" title="Autism">

Autism

Autism is a neurodevelopmental disorder characterized by impaired social interaction, verbal and non-verbal communication, and restricted and repetitive behavior. Parents usually notice signs in the first two years of their child's life. These signs often develop gradually, though some children with autism reach their developmental milestones at a normal pace and then regress. The diagnostic criteria require that symptoms become apparent in early childhood, typically before age three.

...

</doc>

...

每个wiki_num文件里又存放若干个doc,每个doc都有相关的tag标记,包括id, url, title等,很好区分。

这里我们按照Gensim作者提供的word2vec tutorial里"memory-friendly iterator"方式来处理英文维基百科的数据。代码如下,也同步放到了github里:train_word2vec_with_gensim.py

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# Author: Pan Yang (panyangnlp@gmail.com)

# Copyright 2017 @ Yu Zhen

import gensim

import logging

import multiprocessing

import os

import re

import sys

from pattern.en import tokenize

from time import time

logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s',

level=logging.INFO)

def cleanhtml(raw_html):

cleanr = re.compile('<.*?>')

cleantext = re.sub(cleanr, ' ', raw_html)

return cleantext

class MySentences(object):

def __init__(self, dirname):

self.dirname = dirname

def __iter__(self):

for root, dirs, files in os.walk(self.dirname):

for filename in files:

file_path = root + '/' + filename

for line in open(file_path):

sline = line.strip()

if sline == "":

continue

rline = cleanhtml(sline)

tokenized_line = ' '.join(tokenize(rline))

is_alpha_word_line = [word for word in

tokenized_line.lower().split()

if word.isalpha()]

yield is_alpha_word_line

if __name__ == '__main__':

if len(sys.argv) != 2:

print "Please use python train_with_gensim.py data_path"

exit()

data_path = sys.argv[1]

begin = time()

sentences = MySentences(data_path)

model = gensim.models.Word2Vec(sentences,

size=200,

window=10,

min_count=10,

workers=multiprocessing.cpu_count())

model.save("data/model/word2vec_gensim")

model.wv.save_word2vec_format("data/model/word2vec_org",

"data/model/vocabulary",

binary=False)

end = time()

print "Total procesing time: %d seconds" % (end - begin)

注意其中的word tokenize使用了pattern里的英文tokenize模块,当然,也可以使用nltk里的word_tokenize模块,做一点修改即可,不过nltk对于句尾的一些词的work tokenize处理的不太好。另外我们设定词向量维度为200, 窗口长度为10, 最小出现次数为10,通过 is_alpha() 函数过滤掉标点和非英文词。现在可以用这个脚本来训练英文维基百科的Word2Vec模型了:

2017-04-22 14:31:04,703 : INFO : collecting all words and their counts

2017-04-22 14:31:04,704 : INFO : PROGRESS: at sentence #0, processed 0 words, keeping 0 word types

2017-04-22 14:31:06,442 : INFO : PROGRESS: at sentence #10000, processed 480546 words, keeping 33925 word types

2017-04-22 14:31:08,104 : INFO : PROGRESS: at sentence #20000, processed 983240 words, keeping 51765 word types

2017-04-22 14:31:09,685 : INFO : PROGRESS: at sentence #30000, processed 1455218 words, keeping 64982 word types

2017-04-22 14:31:11,349 : INFO : PROGRESS: at sentence #40000, processed 1957479 words, keeping 76112 word types

......

2017-04-23 02:50:59,844 : INFO : worker thread finished; awaiting finish of 2 more threads 2017-04-23 02:50:59,844 : INFO : worker thread finished; awaiting finish of 1 more threads 2017-04-23 02:50:59,854 : INFO : worker thread finished; awaiting finish of 0 more threads 2017-04-23 02:50:59,854 : INFO : training on 8903084745 raw words (6742578791 effective words) took 37805.2s, 178351 effective words/s

2017-04-23 02:50:59,855 : INFO : saving Word2Vec object under data/model/word2vec_gensim, separately None

2017-04-23 02:50:59,855 : INFO : not storing attribute syn0norm

2017-04-23 02:50:59,855 : INFO : storing np array 'syn0' to data/model/word2vec_gensim.wv.syn0.npy

2017-04-23 02:51:00,241 : INFO : storing np array 'syn1neg' to data/model/word2vec_gensim.syn1neg.npy

2017-04-23 02:51:00,574 : INFO : not storing attribute cum_table

2017-04-23 02:51:13,886 : INFO : saved data/model/word2vec_gensim

2017-04-23 02:51:13,886 : INFO : storing vocabulary in data/model/vocabulary

2017-04-23 02:51:17,480 : INFO : storing 868777x200 projection weights into data/model/word2vec_org

Total procesing time: 44476 seconds

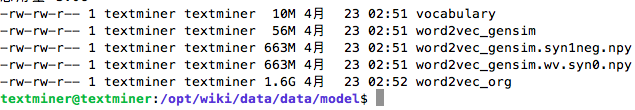

这个训练过程中大概花了12多小时,训练后的文件存放在data/model下:

我们来测试一下这个英文维基百科的Word2Vec模型:

textminer@textminer:/opt/wiki/data$ ipython

Python 2.7.12 (default, Nov 19 2016, 06:48:10)

Type "copyright", "credits" or "license" for more information.

IPython 2.4.1 -- An enhanced Interactive Python.

? -> Introduction and overview of IPython's features.

%quickref -> Quick reference.

help -> Python's own help system.

object? -> Details about 'object', use 'object??' for extra details.

In [1]: from gensim.models import Word2Vec

In [2]: en_wiki_word2vec_model = Word2Vec.load('data/model/word2vec_gensim')

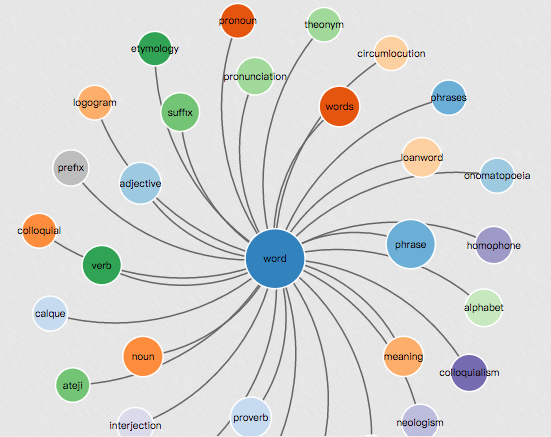

首先来测试几个单词的相似单词(Word Similariy):

word:

In [3]: en_wiki_word2vec_model.most_similar('word')

Out[3]:

[('phrase', 0.8129693269729614),

('meaning', 0.7311851978302002),

('words', 0.7010501623153687),

('adjective', 0.6805518865585327),

('noun', 0.6461974382400513),

('suffix', 0.6440576314926147),

('verb', 0.6319557428359985),

('loanword', 0.6262609958648682),

('proverb', 0.6240501403808594),

('pronunciation', 0.6105246543884277)]

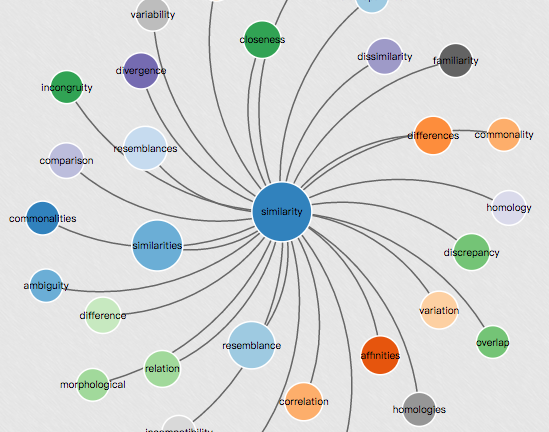

In [4]: en_wiki_word2vec_model.most_similar('similarity')

Out[4]:

[('similarities', 0.8517599701881409),

('resemblance', 0.786037266254425),

('resemblances', 0.7496883869171143),

('affinities', 0.6571112275123596),

('differences', 0.6465682983398438),

('dissimilarities', 0.6212711930274963),

('correlation', 0.6071442365646362),

('dissimilarity', 0.6062943935394287),

('variation', 0.5970577001571655),

('difference', 0.5928016901016235)]

nlp:

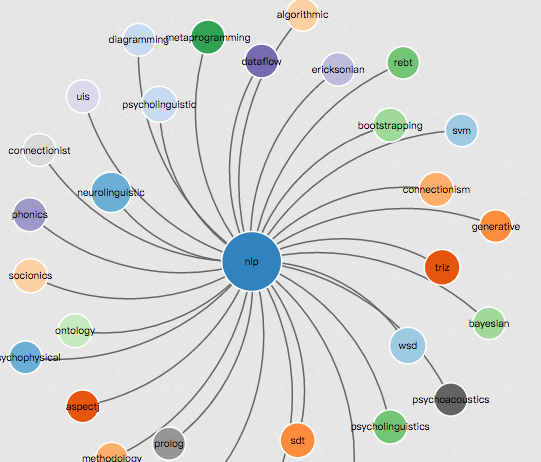

In [5]: en_wiki_word2vec_model.most_similar('nlp')

Out[5]:

[('neurolinguistic', 0.6698148250579834),

('psycholinguistic', 0.6388964056968689),

('connectionism', 0.6027182936668396),

('semantics', 0.5866401195526123),

('connectionist', 0.5865628719329834),

('bandler', 0.5837364196777344),

('phonics', 0.5733655691146851),

('psycholinguistics', 0.5613113641738892),

('bootstrapping', 0.559638261795044),

('psychometrics', 0.5555593967437744)]

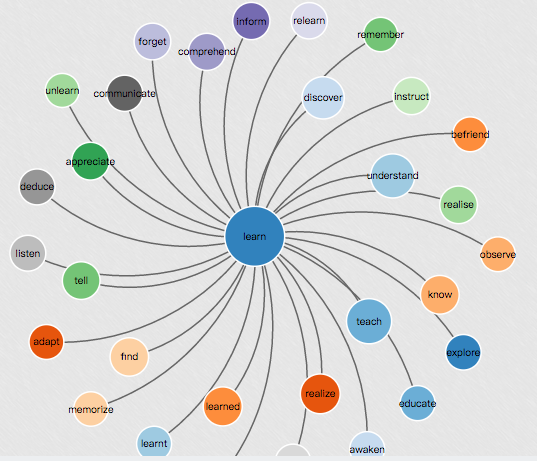

In [6]: en_wiki_word2vec_model.most_similar('learn')

Out[6]:

[('teach', 0.7533557415008545),

('understand', 0.71148681640625),

('discover', 0.6749690771102905),

('learned', 0.6599283218383789),

('realize', 0.6390970349311829),

('find', 0.6308424472808838),

('know', 0.6171890497207642),

('tell', 0.6146825551986694),

('inform', 0.6008728742599487),

('instruct', 0.5998791456222534)]

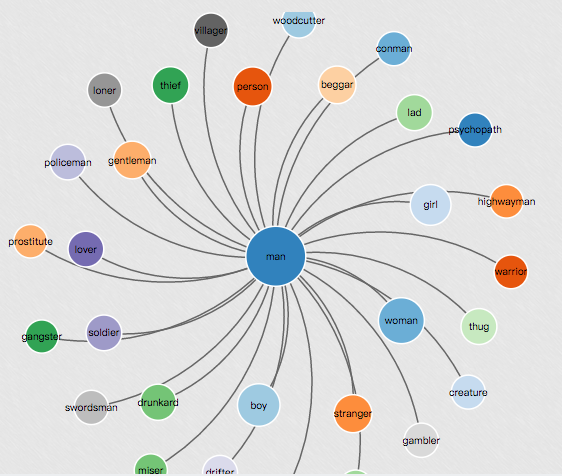

man:

In [7]: en_wiki_word2vec_model.most_similar('man')

Out[7]:

[('woman', 0.7243080735206604),

('boy', 0.7029494047164917),

('girl', 0.6441491842269897),

('stranger', 0.63275545835495),

('drunkard', 0.6136815547943115),

('gentleman', 0.6122575998306274),

('lover', 0.6108279228210449),

('thief', 0.609005331993103),

('beggar', 0.6083744764328003),

('person', 0.597919225692749)]

再来看看其他几个相关接口:

In [8]: en_wiki_word2vec_model.most_similar(positive=['woman', 'king'], negative=['man'], topn=1)

Out[8]: [('queen', 0.7752252817153931)]

In [9]: en_wiki_word2vec_model.similarity('woman', 'man')

Out[9]: 0.72430799548282099

In [10]: en_wiki_word2vec_model.doesnt_match("breakfast cereal dinner lunch".split())

Out[10]: 'cereal'

我把这篇文章的相关代码还有另一篇“中英文维基百科语料上的Word2Vec实验”的相关代码整理了一下,在github上建立了一个 Wikipedia_Word2vec 的项目,感兴趣的同学可以参考。

注:原创文章,转载请注明出处及保留链接“我爱自然语言处理”:http://www.52nlp.cn