Blog backup plan

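A server failure recently cost me most of my blog's content. While rebuilding the blog today, I took the opportunity to improve the site's backup plan. It now rests on two things: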

- Regular backups by the hosting provider

- Off-site backups of the core server data

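The first point needs little explanation. My server is a DigitalOcean machine, which offers a backup option for $2 a month, so I simply turned it on. The second point means copying the server's content off-site to a MinIO instance at home, which runs in Docker on a Synology NAS.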

Automatic backup script

Rclone

The official Rclone site (https://rclone.org/) describes it as follows:

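Rclone is a command-line program to manage files on cloud storage. It is a feature-rich alternative to cloud vendors' web storage interfaces. Over 40 cloud storage products support rclone, including S3 object stores, business and consumer file storage services, and standard transfer protocols.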

On a Linux/macOS/BSD server, you can install rclone quickly with the following command:

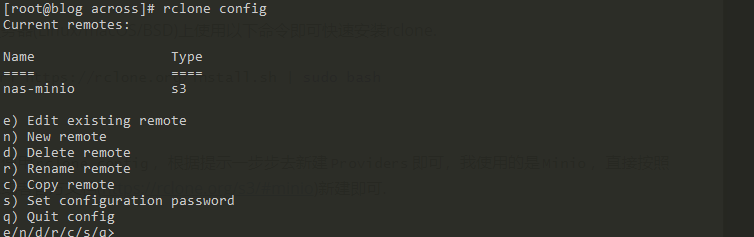

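```bash
curl https://rclone.org/install.sh | sudo bash
```

Then run rclone config and follow the prompts to create a new remote (provider). I use MinIO, so I created mine by following the prompts, or the official docs at https://rclone.org/s3/#minio.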
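You may prefer not to click through the interactive prompts. The MinIO example in the rclone S3 docs shows what the resulting entry in rclone.conf looks like. The sketch below prints an equivalent section from a shell function. The function name minio_remote_conf, the remote name nas-minio, the endpoint and the keys are all placeholders, not values from my setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an rclone.conf section for a MinIO remote.
# The keys mirror the MinIO example in the rclone S3 documentation;
# every argument value is a placeholder supplied by the caller.
minio_remote_conf() {
    local name="$1" endpoint="$2" access_key="$3" secret_key="$4"
    cat <<EOF
[${name}]
type = s3
provider = Minio
env_auth = false
access_key_id = ${access_key}
secret_access_key = ${secret_key}
region = us-east-1
endpoint = ${endpoint}
EOF
}

# Usage (placeholder values; ~/.config/rclone/rclone.conf is the default
# location on Linux, and "rclone config file" prints the path actually in use):
#   minio_remote_conf nas-minio http://192.168.1.10:9000 MY_ACCESS_KEY MY_SECRET_KEY \
#       >> ~/.config/rclone/rclone.conf
#   rclone lsd nas-minio:
```

After appending the section, rclone lsd nas-minio: should list the buckets. That is a quick way to confirm the endpoint and keys before pointing backup.sh at the remote.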

What is Backup.sh

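I first set up backups back in 2019. As I recall, I had come across this article, https://teddysun.com/469.html, found the script handy and gave it a try. According to the script's author, it has the following features: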

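- Full or selective backup of MySQL/MariaDB/Percona databases;

- Backup of specified directories or files;

- Encryption of backup files (requires the openssl command; optional);

- Upload to Google Drive (requires rclone to be installed and configured first; optional);

- When local backups older than a given number of days are deleted, the files with the same names on Google Drive are deleted too (optional).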

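Still, one feature deserves more emphasis than the list gives it. With encryption enabled, the backup data is encrypted on the server before it is uploaded anywhere.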

Trimming Backup.sh down to my own needs

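I run a MinIO service on my local NAS. While using the script, I found that I didn't need some of its features, such as FTP upload, and that its handling of backup directories wasn't quite what I wanted. So I modified it myself. Here is the modified script

(updated 2021-11-23: added syncing to Google Drive):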

```bash
#!/usr/bin/env bash
#
# Copyright (C) 2013 - 2020 Teddysun <i@teddysun.com>
#
# This file is part of the LAMP script.
#
# LAMP is a powerful bash script for the installation of
# Apache + PHP + MySQL/MariaDB and so on.
# You can install Apache + PHP + MySQL/MariaDB in a very easy way.
# Just need to input numbers to choose what you want to install before installation.
# And all things will be done in a few minutes.
#
# Description: Auto backup shell script
# Description URL: https://teddysun.com/469.html
#
# Website: https://lamp.sh
# Github: https://github.com/teddysun/lamp
#
# You must modify the config before running it!!!
# Backup MySQL/MariaDB databases, files and directories
# Backup file is encrypted with AES256-cbc with SHA1 message-digest (option)
# Auto transfer backup file to Minio and Google Drive (need install rclone command) (option)
# Auto delete old remote files on Minio and Google Drive (option)

[[ $EUID -ne 0 ]] && echo "Error: This script must be run as root!" && exit 1

########## START OF CONFIG ##########

# Encrypt flag (true: encrypt, false: not encrypt)
ENCRYPTFLG=true

# WARNING: KEEP THE PASSWORD SAFE!!! (replace this placeholder)
# The password used to encrypt the backup
# To decrypt backups made by this script, run the following command:
# openssl enc -aes256 -in [encrypted backup] -out decrypted_backup.tgz -pass pass:[backup password] -d -md sha1
BACKUPPASS="123456"

# Directory to store backups
LOCALDIR="/opt/backups/"

# Temporary directory used during backup creation
TEMPDIR="/opt/backups/temp/"

# File to log the outcome of backups
LOGFILE="/opt/backups/backup.log"

# OPTIONAL:
# If you want to backup the MySQL database, enter the MySQL root password below, otherwise leave it blank
MYSQL_ROOT_PASSWORD=""

# Below is a list of MySQL database names that will be backed up
# If you want to backup ALL databases, leave it blank.
MYSQL_DATABASE_NAME[0]=""

# Below is a list of files and directories that will be backed up in the tar backup
# For example:
# File: /data/www/default/test.tgz
# Directory: /data/www/default/test
BACKUP[0]=""

# Number of days to store daily local backups (default 7 days)
LOCALAGEDAILIES="7"

# Delete expired files on Minio/Google Drive as well (true: delete, false: not delete)
DELETE_REMOTE_FILE_FLG=true

# Rclone remote name for Minio
RCLONE_MINIO_NAME=""

# Rclone remote name for Google Drive
RCLONE_GDRIVE_NAME=""

# Minio bucket name
RCLONE_BUCKETS_NAME=""

# Rclone remote folder name (default "")
RCLONE_FOLDER=""

# Upload local file to the rclone remotes flag (true: upload, false: not upload)
RCLONE_FLG=true

########## END OF CONFIG ##########

# Date & Time
DAY=$(date +%d)
MONTH=$(date +%m)
YEAR=$(date +%Y)
BACKUPDATE=$(date +%Y%m%d%H%M%S)

# Backup file name
TARFILE="${LOCALDIR}$(hostname)_${BACKUPDATE}.tgz"
# Encrypted backup file name
ENC_TARFILE="${TARFILE}.enc"
# Backup MySQL dump file name
SQLFILE="${TEMPDIR}mysql_${BACKUPDATE}.sql"

# Print a timestamped message and append it to LOGFILE
log() {
    echo "$(date "+%Y-%m-%d %H:%M:%S")" "$1"
    echo "$(date "+%Y-%m-%d %H:%M:%S")" "$1" >> "${LOGFILE}"
}

# Check for list of mandatory binaries
check_commands() {
    # Do not check mysql command if you do not want to backup the MySQL database
    if [ -z "${MYSQL_ROOT_PASSWORD}" ]; then
        BINARIES=( awk cat cd du date dirname echo openssl pwd rm tar )
    else
        BINARIES=( awk cat cd du date dirname echo openssl mysql mysqldump pwd rm tar )
    fi
    # Iterate over the list of binaries, and if one isn't found, abort
    for BINARY in "${BINARIES[@]}"; do
        if ! command -v "${BINARY}" > /dev/null 2>&1; then
            log "${BINARY} is not installed. Install it and try again"
            exit 1
        fi
    done
    # rclone is optional: without it, uploads and remote cleanup are skipped
    RCLONE_COMMAND=false
    if command -v rclone > /dev/null 2>&1; then
        RCLONE_COMMAND=true
    elif ${RCLONE_FLG}; then
        log "rclone is not installed, uploads to remote storage will be skipped"
    fi
}

calculate_size() {
    local file_name=$1
    local file_size
    file_size=$(du -h "${file_name}" 2>/dev/null | awk '{print $1}')
    if [ -z "${file_size}" ]; then
        echo "unknown"
    else
        echo "${file_size}"
    fi
}

# Backup MySQL databases
mysql_backup() {
    if [ -z "${MYSQL_ROOT_PASSWORD}" ]; then
        log "MySQL root password not set, MySQL backup skipped"
        return 0
    fi
    log "MySQL dump start"
    # Verify the root password with a trivial query before dumping anything
    if ! mysql -u root -p"${MYSQL_ROOT_PASSWORD}" -e "SELECT 1" > /dev/null 2>&1; then
        log "MySQL root password is incorrect. Please check it and try again"
        exit 1
    fi
    # "${MYSQL_DATABASE_NAME[*]}" is empty only when no database name is configured
    if [ -z "${MYSQL_DATABASE_NAME[*]}" ]; then
        if ! mysqldump -u root -p"${MYSQL_ROOT_PASSWORD}" --all-databases > "${SQLFILE}" 2>/dev/null; then
            log "MySQL all databases backup failed"
            exit 1
        fi
        log "MySQL all databases dump file name: ${SQLFILE}"
        # Add MySQL backup dump file to BACKUP list
        BACKUP+=("${SQLFILE}")
    else
        for db in "${MYSQL_DATABASE_NAME[@]}"; do
            [ -z "${db}" ] && continue
            DBFILE="${TEMPDIR}${db}_${BACKUPDATE}.sql"
            if ! mysqldump -u root -p"${MYSQL_ROOT_PASSWORD}" "${db}" > "${DBFILE}" 2>/dev/null; then
                log "MySQL database name [${db}] backup failed, please check database name is correct and try again"
                exit 1
            fi
            log "MySQL database name [${db}] dump file name: ${DBFILE}"
            # Add MySQL backup dump file to BACKUP list
            BACKUP+=("${DBFILE}")
        done
    fi
    log "MySQL dump completed"
}

start_backup() {
    # Keep only non-empty entries: the default BACKUP[0]="" is just a placeholder,
    # so checking ${#BACKUP[@]} alone would never detect an unconfigured script
    local targets=() item
    for item in "${BACKUP[@]}"; do
        [ -n "${item}" ] && targets+=("${item}")
    done
    if [ "${#targets[@]}" -eq 0 ]; then
        log "Error: You must modify the [$(basename "$0")] config before running it!"
        exit 1
    fi

    log "Tar backup file start"
    # -P stores absolute paths. GNU tar exits with 1 when a file changed while
    # being read, which is not fatal, so only exit codes above 1 count as failure
    tar -zcPf "${TARFILE}" "${targets[@]}"
    if [ $? -gt 1 ]; then
        log "Tar backup file failed"
        exit 1
    fi
    log "Tar backup file completed"

    # Encrypt tar file
    if ${ENCRYPTFLG}; then
        log "Encrypt backup file start"
        if ! openssl enc -aes256 -in "${TARFILE}" -out "${ENC_TARFILE}" -pass pass:"${BACKUPPASS}" -md sha1; then
            # Keep the unencrypted tar so this backup is not lost
            log "Encrypt backup file failed"
            exit 1
        fi
        log "Encrypt backup file completed"
        # Delete unencrypted tar
        log "Delete unencrypted tar file: ${TARFILE}"
        rm -f "${TARFILE}"
    fi

    # Delete MySQL temporary dump files (the -e test skips the unmatched glob)
    for sql in "${TEMPDIR}"*.sql; do
        [ -e "${sql}" ] || continue
        log "Delete MySQL temporary dump file: ${sql}"
        rm -f "${sql}"
    done

    if ${ENCRYPTFLG}; then
        OUT_FILE="${ENC_TARFILE}"
    else
        OUT_FILE="${TARFILE}"
    fi
    log "File name: ${OUT_FILE}, File size: $(calculate_size "${OUT_FILE}")"
}

# Transfer backup file to Minio
# If you want to install rclone command, please visit website:
# https://rclone.org/downloads/
rclone_upload_to_nas() {
    if ${RCLONE_FLG} && ${RCLONE_COMMAND}; then
        [ -z "${RCLONE_MINIO_NAME}" ] && log "Error: RCLONE_MINIO_NAME can not be empty!" && return 1
        [ -z "${RCLONE_BUCKETS_NAME}" ] && log "Error: RCLONE_BUCKETS_NAME can not be empty!" && return 1
        log "Transferring backup file: ${OUT_FILE} to Minio"
        # rclone reports on stderr, so send both streams to the log
        if ! rclone copy "${OUT_FILE}" "${RCLONE_MINIO_NAME}:${RCLONE_BUCKETS_NAME}/${RCLONE_FOLDER}" >> "${LOGFILE}" 2>&1; then
            log "Error: Transferring backup file: ${OUT_FILE} to Minio failed"
            return 1
        fi
        log "Transferring backup file: ${OUT_FILE} to Minio completed"
    fi
}

# Transfer backup file to Google Drive
# If you want to install rclone command, please visit website:
# https://rclone.org/downloads/
rclone_upload_to_gdrive() {
    if ${RCLONE_FLG} && ${RCLONE_COMMAND}; then
        [ -z "${RCLONE_GDRIVE_NAME}" ] && log "Error: RCLONE_GDRIVE_NAME can not be empty!" && return 1
        log "Transferring backup file: ${OUT_FILE} to Google Drive"
        if ! rclone copy "${OUT_FILE}" "${RCLONE_GDRIVE_NAME}:/${RCLONE_FOLDER}" >> "${LOGFILE}" 2>&1; then
            log "Error: Transferring backup file: ${OUT_FILE} to Google Drive failed"
            return 1
        fi
        log "Transferring backup file: ${OUT_FILE} to Google Drive completed"
    fi
}

# Compute a backup file's age (FILEAGE) from its name: hostname_YYYYMMDDHHMMSS.tgz[.enc]
get_file_date() {
    # Approximate a 30-day month and 365-day year
    DAYS=$(( 10#${YEAR} * 365 + 10#${MONTH} * 30 + 10#${DAY} ))
    unset FILEYEAR FILEMONTH FILEDAY FILEDAYS FILEAGE
    # Take the timestamp after the LAST underscore, so host names containing "_" still work
    local stamp="${1##*_}"
    stamp="${stamp:0:8}"
    [[ "${stamp}" =~ ^[0-9]{8}$ ]] || return 1
    FILEYEAR=${stamp:0:4}
    FILEMONTH=${stamp:4:2}
    FILEDAY=${stamp:6:2}
    FILEDAYS=$(( 10#${FILEYEAR} * 365 + 10#${FILEMONTH} * 30 + 10#${FILEDAY} ))
    FILEAGE=$(( DAYS - FILEDAYS ))
    return 0
}

# Delete Minio's old backup file
delete_minio_file() {
    local FILENAME=$1
    local REMOTE_FILE="${RCLONE_MINIO_NAME}:${RCLONE_BUCKETS_NAME}/${RCLONE_FOLDER}/${FILENAME}"
    if ${DELETE_REMOTE_FILE_FLG} && ${RCLONE_COMMAND} && [ -n "${RCLONE_MINIO_NAME}" ]; then
        # Only the exit code matters here, so discard stdout AND stderr
        if rclone ls "${REMOTE_FILE}" > /dev/null 2>&1; then
            if rclone delete "${REMOTE_FILE}" >> "${LOGFILE}" 2>&1; then
                log "Minio's old backup file: ${FILENAME} has been deleted"
            else
                log "Failed to delete Minio's old backup file: ${FILENAME}"
            fi
        else
            log "Minio's old backup file: ${FILENAME} does not exist"
        fi
    fi
}

# Delete Google Drive old backup file
delete_gdrive_file() {
    local FILENAME=$1
    # Use the same path for the existence check and the delete
    local REMOTE_FILE="${RCLONE_GDRIVE_NAME}:/${RCLONE_FOLDER}/${FILENAME}"
    if ${DELETE_REMOTE_FILE_FLG} && ${RCLONE_COMMAND} && [ -n "${RCLONE_GDRIVE_NAME}" ]; then
        if rclone ls "${REMOTE_FILE}" > /dev/null 2>&1; then
            if rclone delete "${REMOTE_FILE}" >> "${LOGFILE}" 2>&1; then
                log "Google Drive old backup file: ${FILENAME} has been deleted"
            else
                log "Failed to delete Google Drive old backup file: ${FILENAME}"
            fi
        else
            log "Google Drive old backup file: ${FILENAME} does not exist"
        fi
    fi
}

# Clean up local backups older than LOCALAGEDAILIES days, plus their remote copies
clean_up_files() {
    cd "${LOCALDIR}" || exit 1
    local files f
    if ${ENCRYPTFLG}; then
        files=( *.enc )
    else
        files=( *.tgz )
    fi
    for f in "${files[@]}"; do
        # Skip the literal pattern left behind when nothing matches
        [ -e "${f}" ] || continue
        if get_file_date "${f}" && [ "${FILEAGE}" -gt "${LOCALAGEDAILIES}" ]; then
            rm -f "${f}"
            log "Old backup file name: ${f} has been deleted"
            delete_minio_file "${f}"
            delete_gdrive_file "${f}"
        fi
    done
}

# Main progress
STARTTIME=$(date +%s)

# Create the backup folders if they do not exist
mkdir -p "${LOCALDIR}" "${TEMPDIR}"

log "Backup progress start"
check_commands
mysql_backup
start_backup
log "Backup progress complete"

log "Upload progress start"
rclone_upload_to_nas
rclone_upload_to_gdrive
log "Upload progress complete"

log "Cleaning up"
clean_up_files

ENDTIME=$(date +%s)
DURATION=$((ENDTIME - STARTTIME))
log "All done"
log "Backup and transfer completed in ${DURATION} seconds"
```
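The script's get_file_date treats every month as 30 days and every year as 365 days. Around month and year boundaries the computed age can therefore be off by a few days. For example, a file from 31 Dec 2021 checked on 1 Jan 2022 comes out as 5 days old rather than 1. If exact retention matters to you, one alternative is to let GNU date count real calendar days. This is not part of Teddysun's script or my version. The helper name file_age_days is hypothetical. It assumes GNU coreutils date (the -d option) and file names of the form hostname_YYYYMMDDHHMMSS.tgz[.enc].

```bash
#!/usr/bin/env bash
# Hypothetical alternative to get_file_date's 30/365-day approximation.
# Assumes GNU date and names like hostname_YYYYMMDDHHMMSS.tgz or .tgz.enc.
# Usage: file_age_days <file name> [today as YYYY-MM-DD]
file_age_days() {
    local name stamp today file_epoch today_epoch
    name="$(basename "$1")"
    # Timestamp after the LAST underscore, so host names with "_" still parse
    stamp="${name##*_}"
    stamp="${stamp:0:8}"
    [[ "${stamp}" =~ ^[0-9]{8}$ ]] || return 1
    # Today's local calendar date, unless a date is passed in
    today="${2:-$(date +%Y-%m-%d)}"
    # Interpret both dates as UTC midnight so daylight-saving shifts cannot skew the result
    file_epoch=$(date -u -d "${stamp:0:4}-${stamp:4:2}-${stamp:6:2}" +%s) || return 1
    today_epoch=$(date -u -d "${today}" +%s) || return 1
    echo $(( (today_epoch - file_epoch) / 86400 ))
}
```

Inside clean_up_files, the condition could then read age=$(file_age_days "${f}") && [ "${age}" -gt "${LOCALAGEDAILIES}" ] in place of the get_file_date call.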

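If you have seen the original, you will notice that I removed all the FTP-related functions. I also added RCLONE_BUCKETS_NAME, which keeps the bucket name separate from RCLONE_FOLDER.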
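With the bucket split out, a MinIO destination takes the form remote:bucket/folder. If RCLONE_FOLDER is left empty, plain string concatenation as in the script produces paths such as nas-minio:backups/ or nas-minio:backups//file.tgz.enc, with a stray or doubled slash. The sketch below is a possible tidier approach, not something the script contains. The helper name remote_path is hypothetical. It joins only the non-empty parts, so the same call works with or without a folder.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build "remote:bucket/folder/file", skipping empty parts
# so an empty RCLONE_FOLDER never yields a doubled slash.
# Usage: remote_path <remote> [part ...]
remote_path() {
    local remote="$1" path="" part
    shift
    for part in "$@"; do
        part="${part#/}"   # drop one leading slash
        part="${part%/}"   # drop one trailing slash
        [ -z "${part}" ] && continue
        # Prepend "/" only when something is already in the path
        path="${path:+${path}/}${part}"
    done
    printf '%s:%s\n' "${remote}" "${path}"
}

# Example (names are placeholders):
#   rclone copy "${OUT_FILE}" "$(remote_path "${RCLONE_MINIO_NAME}" "${RCLONE_BUCKETS_NAME}" "${RCLONE_FOLDER}")"
```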

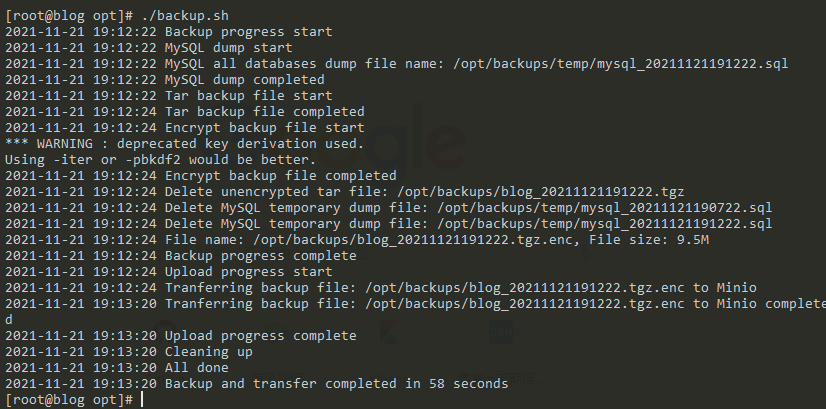

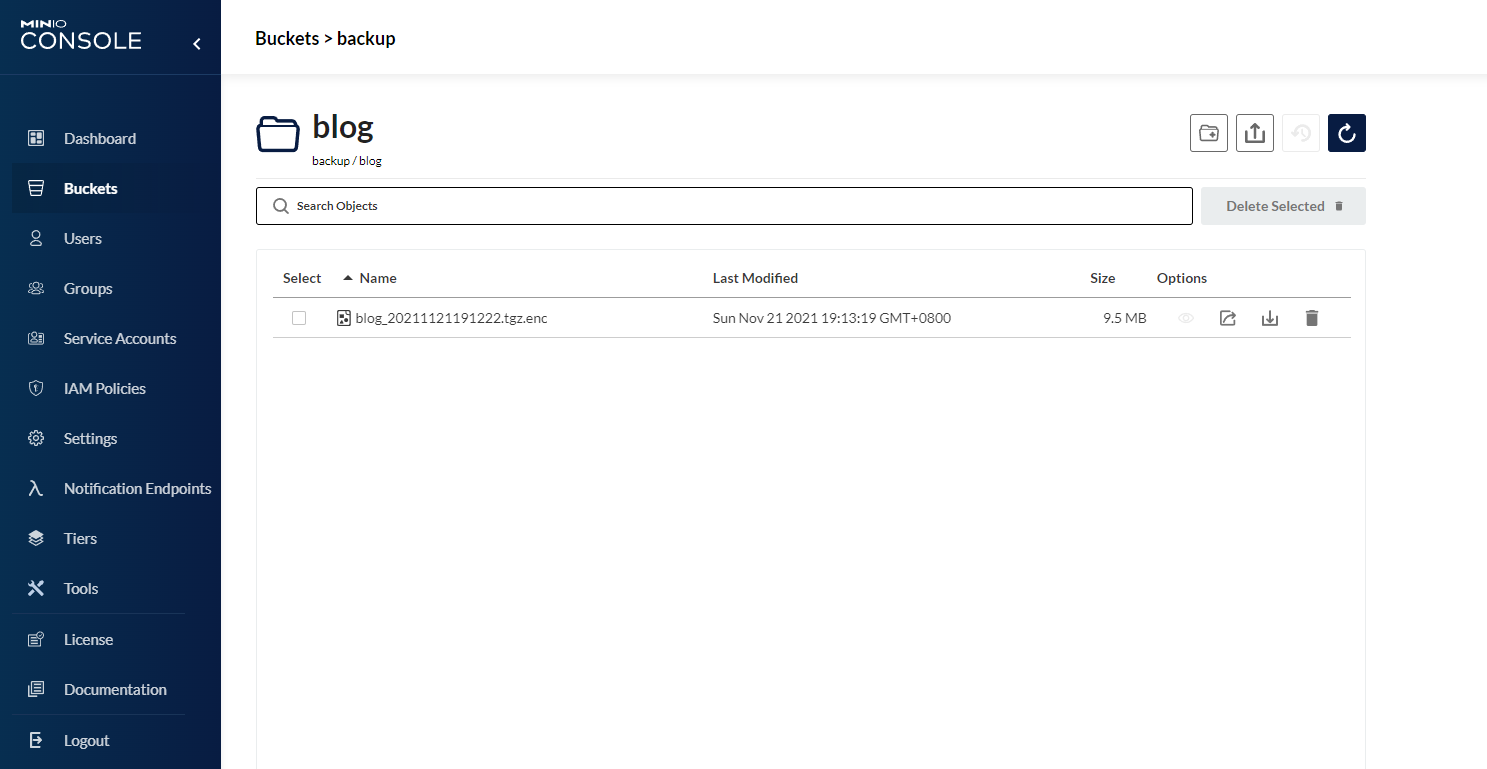

Once everything is configured, check the upload result.

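As you can see, the backup has arrived on my local NAS. All that remains is to add a scheduled task for backup.sh, such as a cron job, and the backups become fully automatic.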
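The scheduled task could look like the minimal sketch below. It assumes the script lives at /root/backup.sh (a placeholder path) and runs daily at 03:30 from root's crontab, since the script refuses to run as any other user. The helper names cron_line and add_cron_job are hypothetical. add_cron_job only appends the entry if an identical line is not already in the crontab.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a crontab line that runs the backup script.
# backup.sh writes its own log to LOGFILE, so cron's output is discarded.
cron_line() {
    local schedule="$1" script="$2"
    printf '%s bash %s > /dev/null 2>&1\n' "${schedule}" "${script}"
}

# Append the line to the current user's crontab unless it is already present.
add_cron_job() {
    local line
    line="$(cron_line "$1" "$2")"
    if crontab -l 2>/dev/null | grep -qxF -- "${line}"; then
        return 0
    fi
    { crontab -l 2>/dev/null; echo "${line}"; } | crontab -
}

# Usage (as root): add_cron_job "30 3 * * *" /root/backup.sh
```

Calling the script through bash means it does not need the executable bit. Checking for the exact line first makes the helper safe to run more than once.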

Decrypting and restoring

To decrypt a backup file, run:

```bash
openssl enc -aes256 -in [ENCRYPTED BACKUP] -out decrypted_backup.tgz -pass pass:[BACKUPPASS] -d -md sha1
```

Decryption gives you an ordinary .tgz archive, which still has to be extracted.
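A wrong password or openssl option is better discovered in a rehearsal than during a real restore. The minimal sketch below assumes openssl and GNU tar are installed. It runs the same pipeline on a throwaway file: tar with -P, encryption with the options the script uses, decryption with the command above, then a listing of the archive. The function name rehearse_restore and the sample password are placeholders. The -md sha1 option must match on both sides. OpenSSL 1.1.0 changed the default digest of enc from MD5 to SHA-256, so pinning it explicitly keeps old backups decryptable on newer systems.

```bash
#!/usr/bin/env bash
# Hypothetical rehearsal of the backup/restore pipeline on a throwaway file.
# Mirrors the script: tar -zcPf, then openssl enc -aes256 ... -md sha1.
rehearse_restore() {
    local pass="$1" work rc=0
    work="$(mktemp -d)" || return 1
    echo "hello backup" > "${work}/sample.txt"

    # 1. Archive with an absolute path (-P), as start_backup does
    tar -zcPf "${work}/test.tgz" "${work}/sample.txt" &&
        # 2. Encrypt with the same options the script uses
        openssl enc -aes256 -in "${work}/test.tgz" -out "${work}/test.tgz.enc" \
            -pass pass:"${pass}" -md sha1 &&
        # 3. Decrypt exactly as in the restore command
        openssl enc -aes256 -in "${work}/test.tgz.enc" -out "${work}/decrypted.tgz" \
            -pass pass:"${pass}" -d -md sha1 &&
        # 4. The decrypted archive must list the file under its absolute path
        tar -tzPf "${work}/decrypted.tgz" | grep -qxF "${work}/sample.txt" ||
        rc=1

    rm -rf "${work}"
    return "${rc}"
}

# Usage: rehearse_restore 'your-BACKUPPASS' && echo "restore rehearsal OK"
```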

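To extract it, note that tar archives store relative paths by default. The script passes -P so that tar stores files under their absolute paths, which is why -P is also needed when extracting. Keep in mind that this restores every file to its original absolute location and overwrites whatever is already there: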

```bash
tar -zxPf [DECRYPTED BACKUP FILE]
```

Follow-up

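For now, the data is backed up only to my local NAS and through DigitalOcean's backup service. If both ever failed at once, I would be out of luck. So when I find the time, I plan to modify backup.sh again so that it uses rclone to copy the backups to one more location. That would at least satisfy the "three copies of your data" part of the 3-2-1 backup rule.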
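One way to add further destinations without writing a separate upload function for each is to loop over a list of rclone destinations. This is a sketch of a possible change, not something the script above already does. RCLONE_TARGETS and upload_to_all are hypothetical names, and each entry is a full rclone destination such as remote:bucket/folder.

```bash
#!/usr/bin/env bash
# Hypothetical: every rclone destination that should receive a copy.
RCLONE_TARGETS=(
    "nas-minio:backups/blog"
    "gdrive:backups"
)

# Copy one file to every destination; return the number of failed uploads.
upload_to_all() {
    local file="$1" target failed=0
    shift
    for target in "$@"; do
        if rclone copy "${file}" "${target}"; then
            echo "Uploaded ${file} to ${target}"
        else
            echo "Failed to upload ${file} to ${target}" >&2
            failed=$((failed + 1))
        fi
    done
    return "${failed}"
}

# Usage: upload_to_all "${OUT_FILE}" "${RCLONE_TARGETS[@]}"
```

The loop keeps going after a failure, so one unreachable destination does not stop the other copies from being made. The non-zero return value still tells the caller that something went wrong.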

Data is priceless. Treasure it.