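I recently read Python's PEP 8, which asks that code comments be written mainly in English. I have been trying hard to follow that in this project, but the results have not been great. If meeting a readability standard for other people makes my own coding inefficient, that is clearly not worth it. Never mind. This chapter covers the "Hello world" of the CNN world: handwritten digit recognition. I will type it out myself from scratch.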

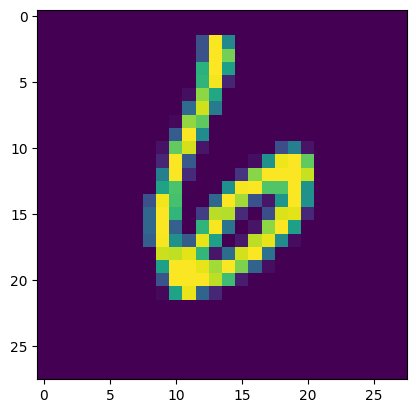

MNIST (Modified National Institute of Standards and Technology) is the “Hello world” dataset of computer vision. Since its release in 1999, this classic dataset of handwritten images has served as the basis for benchmarking classification algorithms. As new machine learning techniques emerge, MNIST remains a reliable resource for researchers and learners alike.

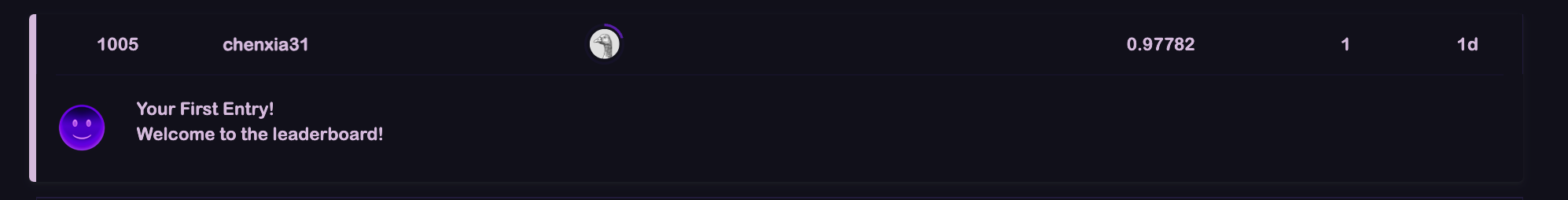

In this competition, your goal is to correctly identify digits from a dataset of tens of thousands of handwritten images. We have curated a set of tutorial-style kernels which cover everything from regression to neural networks. We encourage you to experiment with different algorithms to learn first-hand what works well and how techniques compare.

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    import torch
    import torch.nn as nn
    from torch.utils.data import DataLoader
    from sklearn.model_selection import train_test_split
    import torchvision.transforms as transforms
    from torch.utils.data import Dataset

    device = torch.device('mps')
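Hard-coding `'mps'` only works on Apple-silicon machines. A minimal sketch of a fallback follows; `pick_device` is a hypothetical helper name of mine, not something from the original code.

```python
import torch


def pick_device():
    """Return CUDA if available, then MPS, otherwise the CPU (hypothetical helper)."""
    if torch.cuda.is_available():
        return torch.device('cuda')
    # Older torch builds have no torch.backends.mps, so look it up defensively.
    mps = getattr(torch.backends, 'mps', None)
    if mps is not None and mps.is_available():
        return torch.device('mps')
    return torch.device('cpu')


device = pick_device()
probe = torch.zeros(1, device=device)  # fails early if the device is unusable
```

The rest of the code can keep using `device` unchanged.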

    ... '../DigitRecongnier/data/train.csv')
    ... ['label'].values
    ... ['label'], axis=1)
    ... 0.2, random_state=42)
    ... 255
    ... 255

    class TensorDatasetSelf(Dataset):
        '''Tensor dataset: subclass Dataset; only __len__ and __getitem__ need to be overridden.'''

        def __init__(self, features, target, transform=None) -> None:
            super().__init__()
            ...

        def __len__(self):
            return self.datatensor.size(0)

        def __getitem__(self, index):
            ... (1, 28, 28))
            return X, self.labeltensor[index]
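The data-preparation listing survives only in fragments, so here is a minimal sketch of how those steps might fit together. Random pixels stand in for `train.csv`. The variable names (`features`, `labels`, `X_train`, and so on) and the body of `__init__` are my assumptions. Only the class name, `datatensor`, `labeltensor`, the `0.2` split with `random_state=42`, the division by 255, and the `(1, 28, 28)` reshape come from the listing.

```python
import numpy as np
import torch
from torch.utils.data import Dataset
from sklearn.model_selection import train_test_split

# Synthetic stand-in for train.csv: 100 rows of 784 pixel values in [0, 255].
rng = np.random.default_rng(0)
features = rng.integers(0, 256, size=(100, 784)).astype(np.float32)
labels = rng.integers(0, 10, size=100)

# Hold out 20% for validation, then scale pixels to [0, 1].
X_train, X_val, y_train, y_val = train_test_split(
    features, labels, test_size=0.2, random_state=42)
X_train, X_val = X_train / 255, X_val / 255


class TensorDatasetSelf(Dataset):
    """Custom dataset: only __len__ and __getitem__ need to be overridden."""

    def __init__(self, features, target, transform=None) -> None:
        super().__init__()
        # Assumed storage layout: one float tensor of pixels, one long tensor of labels.
        self.datatensor = torch.as_tensor(features, dtype=torch.float32)
        self.labeltensor = torch.as_tensor(target, dtype=torch.long)
        self.transform = transform

    def __len__(self):
        return self.datatensor.size(0)

    def __getitem__(self, index):
        # Turn a flat 784-vector into a single-channel 28x28 image.
        X = self.datatensor[index].reshape((1, 28, 28))
        if self.transform is not None:
            X = self.transform(X)
        return X, self.labeltensor[index]


train_ds = TensorDatasetSelf(X_train, y_train)
val_ds = TensorDatasetSelf(X_val, y_val)
```

Storing everything as tensors up front keeps `__getitem__` cheap, which is fine for a dataset as small as MNIST.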

Hint01: Several ways to build a dataset in torch

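- Write a custom Dataset class by subclassing `torch.utils.data.Dataset`; you need to override the `__len__()` and `__getitem__()` methods.
- Use the `TensorDataset` class, a wrapper that turns tensors directly into a dataset.

Hint02: Adding data preprocessing to a Dataset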

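After passing the features and labels into the dataset class, we may want to apply some preprocessing to the samples on the way out.

Examples are the operations commonly used for image augmentation: enhancement, rotation, and so on.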

from torchvision import transforms

`transforms.Compose()` works like a pipeline that chains a series of transform operations. Common transform operations include:

`transforms.ToTensor()` and custom transforms.

    ... 128
    ... 20
    ... False)
    ... False)
    for X, y in train_iter:
        print(X.shape)
        ... [0][0]
        break

However complex the architecture in a classic CNN paper ends up being, within the deep learning pipeline it only fills the model-building step.

    class LeNet(nn.Module):
        def __init__(self):
            '''input: 28*28, output: 10'''
            super().__init__()
            ... 1, out_channels=16, kernel_size=5, stride=1, padding=0)
            ... 2)
            ... 16, out_channels=32, kernel_size=5, stride=1, padding=0)
            ... 2)
            ... 32 * 16, 10)

        def forward(self, x):
            ...
            ... 0), -1)
            return out
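The `32 * 16` input size of the final layer follows from the shape arithmetic. A 5x5 convolution with stride 1 and no padding shrinks each side by 4, and a pooling layer of size 2 halves it: 28 → 24 → 12 → 8 → 4. The result is 32 channels of 4x4, that is 32 * 16 = 512 features. Below is a minimal sketch consistent with the surviving fragments. The `MaxPool2d` and `ReLU` layers and all attribute names are my assumptions, since only the convolution arguments, the `2`s, and `32 * 16, 10` are visible in the listing.

```python
import torch
import torch.nn as nn


class LeNet(nn.Module):
    def __init__(self):
        """Input: 1x28x28 image; output: 10 class scores."""
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5, stride=1, padding=0)
        self.pool1 = nn.MaxPool2d(2)   # assumed layer type
        self.conv2 = nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5, stride=1, padding=0)
        self.pool2 = nn.MaxPool2d(2)   # assumed layer type
        self.relu = nn.ReLU()          # assumed activation
        self.fc = nn.Linear(32 * 16, 10)

    def forward(self, x):
        out = self.pool1(self.relu(self.conv1(x)))    # 28 -> 24 -> 12
        out = self.pool2(self.relu(self.conv2(out)))  # 12 -> 8 -> 4
        out = out.view(out.size(0), -1)               # flatten to (batch, 32 * 4 * 4)
        out = self.fc(out)
        return out


net = LeNet()
scores = net(torch.zeros(4, 1, 28, 28))
print(scores.shape)  # torch.Size([4, 10])
```

Pushing a dummy batch through the network like this is a quick way to catch a wrong `Linear` input size before training.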

    loss = nn.CrossEntropyLoss()
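One detail worth spelling out: `nn.CrossEntropyLoss` expects raw scores (logits) and integer class indices, so the model should not apply softmax itself. A small sketch with made-up numbers shows that it matches log-softmax followed by negative log-likelihood:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

loss = nn.CrossEntropyLoss()

# Made-up logits for 2 samples and 3 classes; no softmax has been applied.
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
targets = torch.tensor([0, 2])  # integer class indices, not one-hot vectors

value = loss(logits, targets)

# The same quantity computed in two explicit steps.
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
```

By default both results are averaged over the batch.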

    learning_rate = 0.03
    ...

    for epoch in range(epoches):
        for X, y in train_iter:
            ...
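Only the two loop headers of the training code are visible, so the following is a sketch of a typical loop body rather than the original. The SGD optimizer, the value of `epoches`, the stand-in linear model, and the synthetic data are all assumptions. `learning_rate = 0.03` and `nn.CrossEntropyLoss()` come from the text. The data is wrapped with `TensorDataset`, the second approach from Hint01.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)
device = torch.device('cpu')  # swap in 'mps' or 'cuda' where available

# Synthetic stand-in data wrapped directly with TensorDataset.
X_all = torch.rand(256, 1, 28, 28)
y_all = torch.randint(0, 10, (256,))
train_iter = DataLoader(TensorDataset(X_all, y_all), batch_size=128, shuffle=True)

# Stand-in model so the sketch is self-contained; a LeNet instance would go here.
net = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)
loss = nn.CrossEntropyLoss()
learning_rate = 0.03
optimizer = torch.optim.SGD(net.parameters(), lr=learning_rate)  # optimizer choice is an assumption
epoches = 3  # assumed value

history = []
for epoch in range(epoches):
    net.train()
    running, correct, total = 0.0, 0, 0
    for X, y in train_iter:
        X, y = X.to(device), y.to(device)
        optimizer.zero_grad()      # clear gradients from the previous step
        y_hat = net(X)             # forward pass
        l = loss(y_hat, y)
        l.backward()               # backpropagate
        optimizer.step()           # update the weights
        running += l.item() * y.size(0)
        correct += (y_hat.argmax(dim=1) == y).sum().item()
        total += y.size(0)
    history.append(running / total)
    print(f'epoch {epoch + 1}: loss {running / total:.4f}, acc {correct / total:.3f}')
```

On random labels the accuracy stays near chance; the point is only the order of the five steps inside the inner loop.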

    valDF = pd.read_csv('../DigitRecongnier/data/test.csv')
    ... float, device=device)
    ... 1, 1, 28, 28))
    ... 1)
    ... 1, 28001))
    ... 'cpu')
    ... 'ImageId': index, 'Label': label})
    ... '../DigitRecongnier/data/sample_submission.csv', index=False)
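The prediction step can be sketched end to end as follows. A five-row random frame stands in for `test.csv` and an untrained linear model stands in for the trained network, so both are assumptions. I also use `torch.float32` and divide by 255 to match the training-time scaling, which the fragments above do not confirm. The column names `ImageId` and `Label` and the 1-based index come from the listing.

```python
import numpy as np
import pandas as pd
import torch
import torch.nn as nn

device = torch.device('cpu')

# Synthetic stand-in for test.csv: 5 rows of 784 pixel columns.
valDF = pd.DataFrame(np.random.default_rng(0).integers(0, 256, size=(5, 784)))

# Stand-in for the trained network.
net = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10)).to(device)

# float32 matches the model weights; float64 is not supported on MPS.
X = torch.tensor(valDF.values, dtype=torch.float32, device=device) / 255
X = X.reshape((-1, 1, 28, 28))

net.eval()
with torch.no_grad():                 # no gradients needed for inference
    label = net(X).argmax(dim=1)      # most likely digit per image

index = np.arange(1, len(valDF) + 1)  # ImageId starts at 1
submission = pd.DataFrame({'ImageId': index, 'Label': label.to('cpu').numpy()})
csv_text = submission.to_csv(index=False)  # pass a file path instead to write to disk
```

With the real 28,000-row test file, the same `np.arange` call yields the `1` to `28000` range seen in the fragment.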